Quantum computing is one of the hottest topics in the technology sector today. The technology is enabling individuals and companies to solve computational problems that were previously considered intractable. Cryptography, chemistry, quantum simulation, optimization, machine learning, and numerous other fields have been significantly impacted by this technology. While quantum computers aren’t going to immediately replace classical computers (the ones used for browsing the web), quantum technology is significantly changing the way the world operates.

Research underway at major technology companies and startups, among them Google, IBM, Microsoft, Intel, and Honeywell, has led to a series of technological breakthroughs in building “gated” quantum computer systems. D-Wave has taken a distinctly different approach with a type of analog computing known as “quantum annealing.” Many entities are focused on developing quantum computing hardware, since this is the core bottleneck today. These include both the technology giants listed above and relatively new startups such as QC Ware, Rigetti, IonQ, and Quantum Circuits.

Quantum computing is generally viewed as a field of study centered on developing computer technology based on the principles of quantum theory, the area of physics that explains how matter and energy behave at the atomic and subatomic level. The general approach to developing quantum computers at scale is to design these quantum systems to interact with each other in specific ways while engineering out unwanted interactions with the environment (requirements summarized in the DiVincenzo criteria).

The mechanics of quantum computing

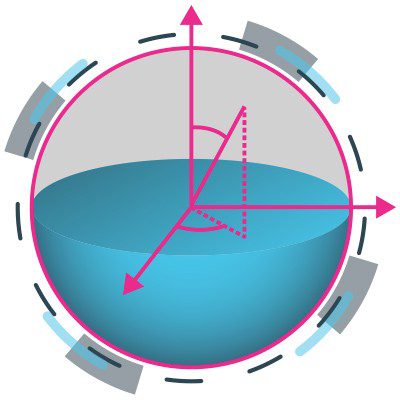

Quantum computing is a unique technology because it isn’t built on bits that are binary in nature, meaning that they’re either zero or one. Instead, the technology is based on qubits. These are two-state quantum mechanical systems that can be part zero and part one at the same time. The quantum property of “superposition,” when combined with “entanglement,” allows N qubits to act as a group rather than exist in isolation. The joint state of N qubits is described by 2^N amplitudes, an exponentially larger state space than the N binary values held by N classical bits.
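To make the 2^N scaling concrete, the following is a minimal sketch of a state-vector simulation in plain Python. It is an illustration only, not how any real quantum hardware or vendor toolkit works; the function names (`zero_state`, `apply_hadamard`, `apply_cnot`, `bell_state`) are hypothetical and chosen for this example. It prepares two qubits in an entangled "Bell" state by putting the first qubit into superposition and then linking the second qubit to it.

```python
import math


def zero_state(n):
    """Return the state vector for n qubits all in |0>: a list of 2**n amplitudes."""
    state = [0.0] * (2 ** n)
    state[0] = 1.0
    return state


def apply_hadamard(state, target, n):
    """Apply a Hadamard gate to qubit `target` (qubit 0 is the leftmost bit)."""
    s = 1 / math.sqrt(2)
    bit = 1 << (n - 1 - target)
    new = state[:]
    for i in range(len(state)):
        if i & bit == 0:
            a, b = state[i], state[i | bit]
            new[i] = s * (a + b)        # amplitude where the target bit is 0
            new[i | bit] = s * (a - b)  # amplitude where the target bit is 1
    return new


def apply_cnot(state, control, target, n):
    """Flip qubit `target` in every basis state where qubit `control` is 1."""
    cbit = 1 << (n - 1 - control)
    tbit = 1 << (n - 1 - target)
    new = state[:]
    for i in range(len(state)):
        if i & cbit and not i & tbit:
            new[i], new[i | tbit] = state[i | tbit], state[i]
    return new


def bell_state():
    """Prepare (|00> + |11>) / sqrt(2) from |00>."""
    n = 2
    state = zero_state(n)
    state = apply_hadamard(state, 0, n)  # superposition on qubit 0
    state = apply_cnot(state, 0, 1, n)   # entangle qubit 1 with qubit 0
    return state
```

Calling `bell_state()` returns four amplitudes, with roughly 0.707 on the `00` and `11` entries and zero on the others, so the two qubits can only be described together. The list returned by `zero_state(n)` has `2 ** n` entries, which is why simulating even a few dozen qubits this way quickly exhausts classical memory.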

Although quantum computers have a significant potential performance advantage, system fidelity remains a weak point. Qubits are highly susceptible to disturbances in their environment, making them prone to error. Correcting these errors requires redundant qubits and extensive error-correcting codes. However, work on useful applications of so-called Noisy Intermediate-Scale Quantum (NISQ) devices is proceeding very rapidly. Improving the fidelity of qubit operations is key to increasing the number of gates and the usefulness of quantum algorithms, as well as to implementing error correction schemes with reasonable qubit overhead.
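The link between redundancy and fidelity can be illustrated with a classical analogy. The sketch below is not a quantum error-correcting code (real codes must also handle phase errors and cannot simply copy a quantum state); it only shows the arithmetic of a three-copy repetition code with majority voting, under the assumption that each copy flips independently with probability `p`. The function names are hypothetical.

```python
def encode(bit):
    """Store one logical bit as three redundant copies."""
    return [bit, bit, bit]


def majority_decode(bits):
    """Recover the logical bit by majority vote over three copies."""
    return 1 if sum(bits) >= 2 else 0


def logical_error_rate(p):
    """Probability that majority vote is wrong when each copy flips
    independently with probability p (two or three flips must occur)."""
    two_flips = 3 * p ** 2 * (1 - p)
    three_flips = p ** 3
    return two_flips + three_flips
```

Under these assumptions, the encoded error rate is lower than the raw rate `p` whenever `p` is below one half, and the benefit grows as `p` shrinks. This is one way to see why better physical fidelity reduces the number of redundant qubits an error correction scheme needs.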

The evolution of quantum computing

Although the term quantum computing has only recently become a front-and-center topic in the public eye, the field of quantum information science has been around for several decades. Paul Benioff described a quantum mechanical model of a computer in 1980. In his paper, titled “The computer as a physical system: A microscopic quantum mechanical Hamiltonian model of computers as represented by Turing machines,” Benioff showed that computers could operate under the laws of quantum mechanics. Shortly thereafter, Richard Feynman (1982) wrote a paper titled “Simulating Physics with Computers,” in which he looked at a range of systems that one might want to simulate and argued that they cannot be adequately represented by a classical computer, but could be by a quantum machine.

In 1994, Peter Shor, a researcher at AT&T’s Bell Labs, discovered a quantum algorithm that would enable quantum computers to factor large numbers much faster than the best known classical algorithms. This discovery caught the attention of many and sparked significant interest in quantum computing, because it showed that quantum computers could break common (RSA) cryptography systems. Shor’s algorithm, as it became known, is discussed extensively in the academic paper titled “Algorithms for quantum computation: discrete logarithms and factoring.”
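The structure of Shor’s approach can be sketched classically. Factoring a number reduces to finding the "order" of a randomly chosen base, and it is that order-finding step that a quantum computer speeds up. The sketch below replaces the quantum step with a brute-force loop, so it is only practical for tiny numbers; the function names are hypothetical and the code assumes `1 < a < n`.

```python
from math import gcd


def find_order(a, n):
    """Smallest r > 0 with a**r % n == 1, found by brute force.
    Assumes gcd(a, n) == 1. This is the step Shor's algorithm
    performs efficiently on a quantum computer."""
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r


def factor_from_order(a, n):
    """Try to split n using the order of a modulo n.
    Returns a pair of factors, or None if this choice of a fails."""
    g = gcd(a, n)
    if g != 1:
        return g, n // g          # lucky guess: a already shares a factor
    r = find_order(a, n)
    if r % 2 == 1:
        return None               # odd order: pick another a
    half = pow(a, r // 2, n)
    if half == n - 1:
        return None               # a**(r/2) is -1 mod n: pick another a
    p = gcd(half - 1, n)
    return p, n // p
```

For example, `factor_from_order(7, 15)` finds that 7 has order 4 modulo 15 and returns `(3, 5)`. When the function returns `None`, the usual remedy is to retry with a different base `a`.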

Since the creation of Shor’s algorithm, there have been a variety of innovations in the quantum computing industry, such as the University of Michigan’s creation in 2005 of a scalable, mass-producible semiconductor-chip ion trap, which provided a potential pathway to scalable quantum computing.

In 2009, researchers at Yale University created the first solid-state, gate-based quantum processor. The two-qubit superconducting chip used “artificial atom” qubits. Although each qubit was made up of many atoms, it behaved like a single atom that could occupy two different energy states. The details of this development are documented in the paper titled “Demonstration of two-qubit algorithms with a superconducting quantum processor.”

In the last year there have been significant developments in the quantum sciences; all leading players in the superconducting camp have made their smaller chips accessible externally to software and service companies and preferred partners. Some have opened lower-performing versions and simulators to the community at large. This sign of commercial readiness has further strengthened the general expectation that quantum computing will achieve great strides in the near future.

Google (with a 72-qubit chip called “Bristlecone”), IBM (with a 53-qubit processor), and Rigetti (128 qubits) have established or announced cloud access to their quantum programming toolsets and systems. The roadmaps of all these players extend out to systems with about 1 million qubits. These are just a few of the many developments in the rapidly evolving field of quantum computing.

The future of quantum computing

Because quantum computing is such a broad field, the potential applications of the technology are wide-ranging. A few opportunity areas include artificial intelligence, chemical simulation, molecular modeling, cryptography, optimization, and financial modeling. Several of those potential applications are discussed in more detail below.

Artificial intelligence

The combination of AI and quantum technology is often referred to as quantum artificial intelligence (QAI). Innovations in this space are essential for the development of algorithms used in computer vision, natural language processing (NLP) and robotics.

Molecular modeling

Processing information about chemical molecules and the human genome are two of the most complex tasks performed by modern computers. Although scientists can process some molecular models, simulating interactions between three or more molecules is extremely complicated.

Although developing computers powerful enough for these tasks is a formidable challenge, there has been significant innovation in the space, such as when IBM set a world record for quantum simulation.

Cryptography

The impact of quantum computing on cryptography has been established for more than two decades, beginning with the previously referenced work of Peter Shor that illustrated the threat quantum computing posed to common cryptographic standards.

Post-quantum cryptography, also known as quantum-resistant cryptography, is an emerging field that is expected to grow rapidly in the coming years. The National Institute of Standards and Technology (NIST) and National Security Agency (NSA) have launched extensive initiatives to spur research and development, and eventually new standards, in the space.

Laying the groundwork for future innovations in quantum computing

Quantum computing is only going to increase in complexity over time, and that complexity makes it difficult for businesses and academic institutions to develop innovations on their own.

In 2018, NIST joined forces with SRI International to form the Quantum Economic Development Consortium™ (QED-C™) to support the development of the quantum industry. Led by Joe Broz (Vice President of SRI) as its Executive Director, the QED-C is a consortium of stakeholders working to create a robust commercial quantum industry and associated supply chain.

The QED-C was established under the National Quantum Initiative Act, which was signed into law to create a 10-year plan to accelerate the development of U.S. quantum information science and technology applications. The consortium includes manufacturers and users of components, systems, and products that enable, or are enabled by, quantum technologies.

The QED-C focuses on investment, use cases, enabling technologies, standards, and workforce needs. The consortium is designed to provide a forum for private industry and government agencies, including NIST, DOE, and DOD, to engage with a large cross-section of the quantum ecosystem.

The consortium is intended to enable technology R&D, facilitate industry collaboration and interaction with government agencies, and provide the U.S. government with an industry voice to guide R&D investment, standards and regulations, and workforce decisions.

If you are interested in having your company or institution become a QED-C member or participant, or would like to learn more, you can contact the QED-C Executive Director, Joe Broz, at joe.broz@sri.com or the Associate Director, Celia Merzbacher, at Celia.Merzbacher@sri.com.