Harnessing the power of acoustic language processing to boost customer retention.

Speech is one of the defining traits of our species. Since human beings began to use complex verbal communication, we have used it to build our knowledge base and pass information across time and humanity.

Now, using AI-based voice analysis, businesses can use the intonations of the human voice to create better customer experiences.

Business churn rates

The last thing a business wants is to see customers leave. Loyalty is vital to a healthy, lasting business. A survey from OTO Systems found that while 67% of customers cite bad experiences as a reason for churn, only 1 in 26 makes a complaint. Worryingly, 91% just leave, closing the door behind them.
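To make the "1 in 26" figure concrete, here is a minimal arithmetic sketch. It assumes the ratio applies to unhappy customers, and the function name and the sample figure of 40 complaints are purely illustrative, not taken from the survey.

```python
def estimate_unhappy_customers(complaints: int, one_in: int = 26) -> tuple[int, int]:
    """Return (estimated unhappy customers, how many of them stayed silent).

    Assumption: only 1 in `one_in` unhappy customers complains, so each
    complaint received stands in for `one_in` unhappy customers in total.
    """
    total_unhappy = complaints * one_in
    silent = total_unhappy - complaints
    return total_unhappy, silent


# Hypothetical month with 40 logged complaints.
total, silent = estimate_unhappy_customers(40)
print(f"{total} unhappy customers, {silent} of them silent")
```

Under this assumption, every complaint a contact center logs represents 25 more unhappy customers who never said anything.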

Businesses need to reduce churn. One obvious way is to be highly responsive and proactive with customers who do complain. This requires your business to lean in and listen carefully to those customers.

Here we discuss the power of the human voice in the hands of AI with OTO Systems.

What AI voice analysis tells you that your customers don’t

Human speech is more than just words. Albert Mehrabian, a psychologist known for his work on non-verbal communication, proposed a formula that breaks communication down into three core elements (the weighting of each shown in brackets):

· Nonverbal behavior (facial expressions, for example) (55%);

· Intonation (tone of voice) (38%); and

· The actual spoken word (7%).

The Mehrabian formula suggests that words are not everything. This goes some way to explaining why systems like Siri, which rely on words alone, have limitations: they miss the rich nuances of intonation.

Traditional data tracking platforms do not measure the quality of the customer's experience. Customers who engage via calls provide a huge opportunity to gather insights. Voice, as the Mehrabian formula indicates, offers a rich data set: a view of what the customer feels. Analysis of these data can reveal non-verbal cues that would otherwise remain hidden.

AI-based voice analysis is putting the power of the voice to work in call center management. The insights gained from AI analysis of voice are helping companies reduce customer churn. These AI systems offer the ability to predict accurately whether a customer has had a negative experience and might be about to churn. This gives the business fair warning to take action and save angry customers from churning.

As an added benefit, AI-based voice analysis also enables businesses to coach employees on effective communication. A conversation that is optimized to draw out a customer’s feelings can make the predictions of AI voice analysis even more accurate.

DeepTone: Translating our customer voices using AI

OTO Systems described how it trains AI-based voice sentiment systems, using a large and diverse corpus of data as the training set. The company began language by language, building up data sets across languages. It is now building more universal models that apply across different languages and contexts.

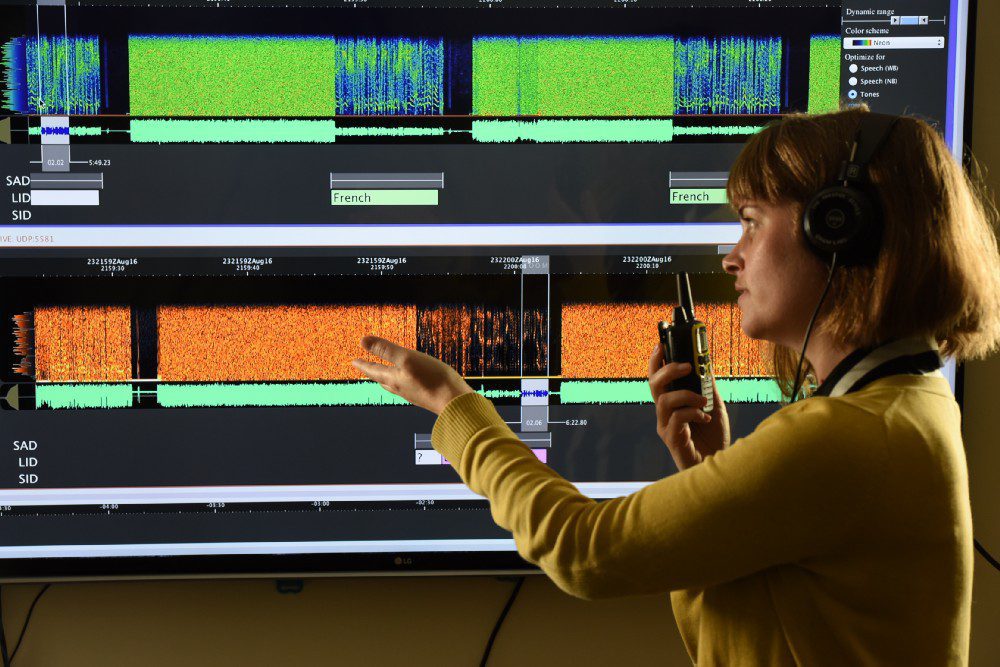

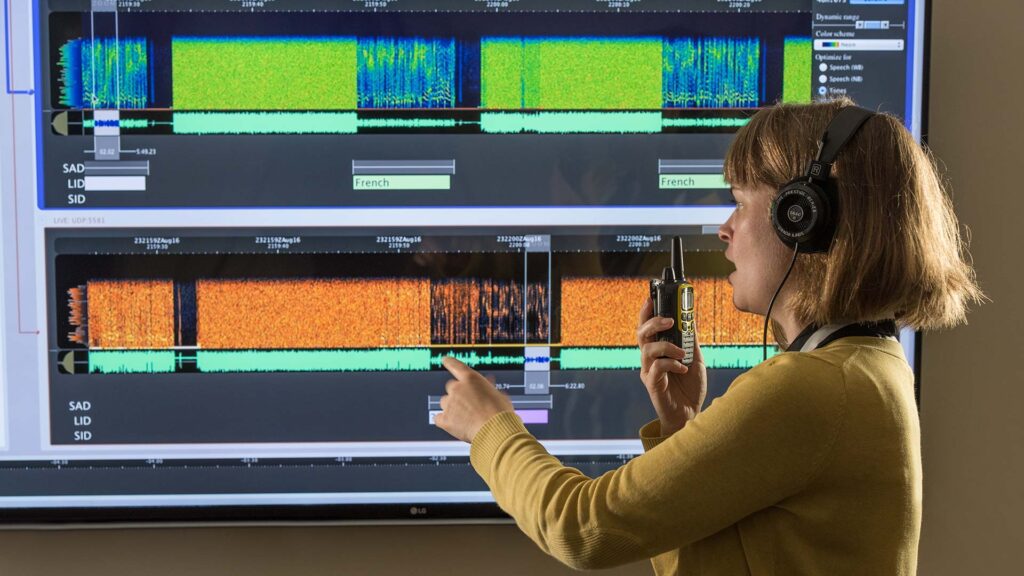

OTO Systems described the application of its state-of-the-art acoustic neural network, known as “DeepTone”. This AI mechanism creates rich, real-time representations of the human voice. OTO told us that the technology was inspired by human cognitive capabilities, which effortlessly distinguish new and different speakers in any given language.

DeepTone works in a similar manner to recognize subtle patterns in the audio waveforms that constitute the core acoustic identity of a speaker; this is achievable in any new conversation.
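As a rough illustration of what an acoustic pattern in a waveform can look like, the sketch below estimates pitch, one basic ingredient of intonation, from a short audio frame using classical autocorrelation. This is not DeepTone's method, which the article does not describe in detail; the function names, the 75 to 400 Hz search range, and the synthetic sine-wave input are all assumptions made for the example.

```python
import math


def autocorrelation_pitch_hz(samples, sample_rate, fmin=75.0, fmax=400.0):
    """Estimate the fundamental frequency (pitch) of one short audio frame.

    Finds the lag, within a plausible range for speech, at which the frame
    best matches a shifted copy of itself, then converts that lag to Hz.
    """
    min_lag = int(sample_rate / fmax)  # shortest period considered
    max_lag = int(sample_rate / fmin)  # longest period considered
    if len(samples) <= max_lag:
        raise ValueError("frame too short for the requested pitch range")

    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # Correlate the frame with itself shifted by `lag` samples.
        score = sum(a * b for a, b in zip(samples, samples[lag:]))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag


def sine_frame(freq_hz, sample_rate=8000, duration_s=0.1):
    """Generate a synthetic tone standing in for a voiced speech frame."""
    n = int(sample_rate * duration_s)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


low = autocorrelation_pitch_hz(sine_frame(100.0), 8000)
high = autocorrelation_pitch_hz(sine_frame(200.0), 8000)
print(f"low tone ~{low:.0f} Hz, high tone ~{high:.0f} Hz")
```

Running the estimator over successive frames of a call would give a pitch contour, the kind of rising or falling pattern listeners hear as intonation. A learned model would work from far richer representations than this single hand-built feature.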

DeepTone learns from millions of conversations; in doing so, the acoustic representations (which are a type of pattern) become increasingly rich and powerful. This is key to breaking new ground in perceptual (or cognitive) tasks, ranging from speaker separation (or identification) to deep behavioral recognition, with unprecedented efficiency.

Building on the success of Siri

The Speech Technology and Research (STAR) Laboratory at SRI had previously worked on Siri, which they then sold to Apple Inc., the rest being history. The lab went on to build an acoustic platform called SenSay Analytics™, which can extract a dense representation of the ‘acoustic space’ from the human voice. The intent was to go deeper into the understanding of speech, behaviors, and emotions. SenSay’s capability gave OTO a starting point to build its first set of solutions for contact centers.

With all great innovations come challenges in research and development. Technology transfer is itself challenging, especially when the technology was not designed for large-scale production. It took OTO Systems some time to understand SenSay’s capabilities and limitations. Intonation analysis is a new field, and there continue to be many promising opportunities and areas of applied research that could transform how we understand human speech.

The future of AI-based voice analysis solutions

OTO Systems sees a closer merging of words and intonation. This will provide a highly granular understanding of how a particular word, or even syllable, is uttered. It has major implications for understanding what someone is actually saying, because it enables analysis of subtle nuances that human listeners may miss.

The OTO Systems engine is language agnostic, although culture and certain emotions or behaviors can affect results. Even so, OTO Systems has already successfully applied a number of English models to German and French.

Although OTO Systems currently focuses on the call center industry, the applications of intonation analysis are almost limitless. As OTO Systems said:

“We are entering a voice-first world. Soon you will speak with everything: your car, watch, fridge, speakers etc. and getting the nuances of speech will be key to creating meaningful conversations.”

Right now, OTO Systems is working on the human quality of conversations in contact centers. Until now, it has not been possible to judge the experiential quality of a call from text alone, as text is too ambiguous. Using intonation, OTO Systems can coach agent engagement, i.e., operators can learn how to use the right energy and empathy with each customer. This is done in real time, and the system can predict whether a customer is unhappy from intonation alone. The value for call centers is that, for the first time, they can measure the quality of customer experiences and act on this information at scale, in real time, to save angry customers from churning.

The emotion analytics market is predicted to be worth $25 billion by 2023. In a world where business is done across the globe and at massive scale, voice recognition with intonation intelligence holds the key to better business decisions.