Speech & natural language

SRI’s speech and language technologies allow us to interact more naturally with computing applications and provide a wealth of actionable information about our intentions, health, and emotional state.

-

SRI’s AI-driven voice analysis could help screen for mental health conditions

Researchers at SRI are developing tools to help clinicians keep a close eye on depression, PTSD, and other mental health issues.

-

SRI is developing textiles that record audio

Turning piezoelectric materials and lithium-ion batteries into thread, innovators will weave fabrics that record sound.

-

Nuance Partners with SCIENTIA Puerto Rico

SRI spin-out Nuance Communications to expand access its Dragon Medical One for the island’s physicians and nurses

-

AI-based speech sentiment analysis technology

Enabling companies to automatically understand the intonation of the human voice.

-

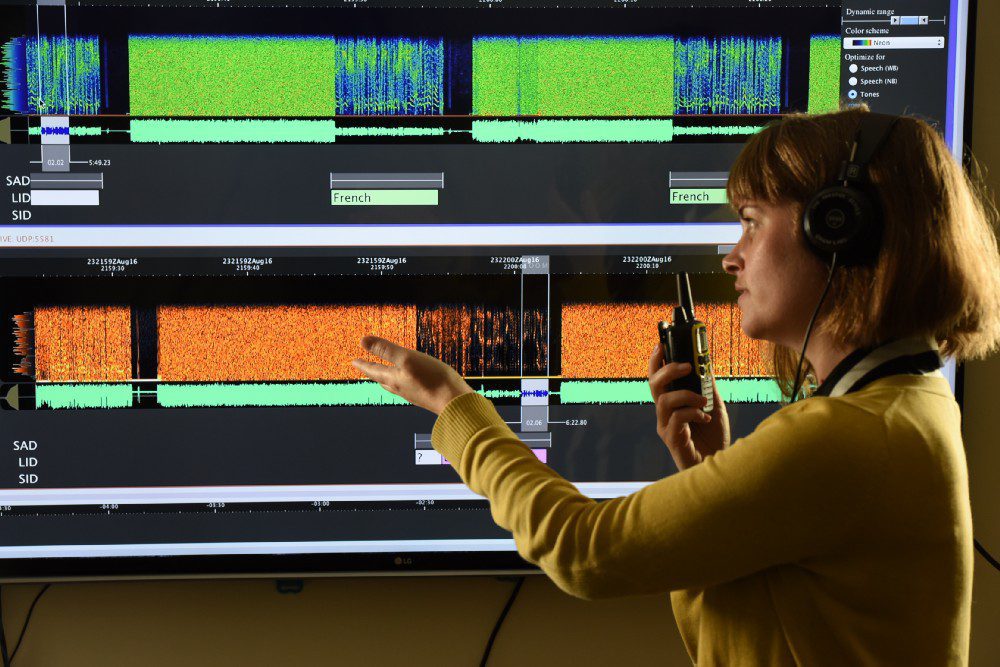

Aaron Lawson talks about the STAR Lab at SRI

Join us to learn about how STAR contributed to the technology that became Nuance, a commercially available speech recognition software, to their latest work in voice biometrics and speaker recognition.

-

VOiCES: SRI and IQT Labs collaborate on advancing speech research for far-field application

VOiCES is poised to power AI and machine learning research that could generate exciting advancements in speech tech.

-

75 Years of Innovation: Speech Recognition

Natural and automated speech recognition for wide-scale commercial application.

-

Unlocking the value of human speech

Harnessing the power of acoustic language processing to boost customer retention.

-

SRI International & SparkLabs Foundry Announce Strategic Global Partnership

SRI and SparkLabs Foundry join forces to deliver comprehensive innovation services to multinational corporations and government agencies.

-

EduSpeak® speech recognition toolkit

EduSpeak is a speech recognition toolkit specifically designed for developers of language-learning applications (such as for English as a Second Language, or ESL) and other educational and training software.

-

DynaSpeak® speech recognition engine

Hidden Markov Model (HMM)-based speech recognizer from SRI.