Computational sensing and embedded low-power processing

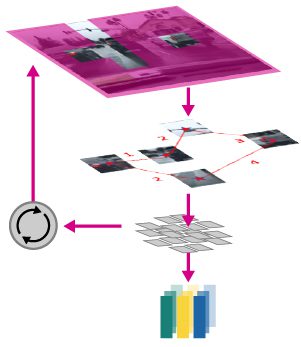

Computational sensing combines optics, sensing, and processing to maximize the information collected for a given application. Computational sensing systems capture information in real time from multi-modality sensors and special-purpose optical paths, then process it to extract signal information.

CVT has developed several computational sensing technologies and systems for government and commercial clients.

Embedded low-power processing

CVT has extensive experience developing low-power AI edge processing solutions for a range of platforms and applications. On the DARPA Hyper-Dimensional Data Enabled Neural Networks (HyDDENN) program, CVT demonstrated non-multiply-accumulate (non-MAC) quantized neural networks with a 100X reduction in the power-latency factor and real-time neural network reconfiguration, using a variety of commercial off-the-shelf (COTS) field programmable gate arrays (FPGAs). Building on HyDDENN, CVT is now developing NeuroEdge, a technology for low-power edge computing on COTS graphics processing units (GPUs), FPGAs, and AI processors, supported by our newly developed NeuroEdge Software Development Kit (SDK). For the Advanced Research Projects Agency–Energy (ARPA-E), we developed battery-powered occupancy sensor systems that operate for multiple years in household and commercial buildings, helping reduce building HVAC power consumption.
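
To illustrate why restricting weights to a few levels removes multipliers, here is a minimal sketch assuming ternary weights in {-1, 0, +1}. The ternary encoding and the function name `ternary_dot` are illustrative assumptions, not a description of HyDDENN's actual non-MAC scheme.

```python
from typing import Sequence


def ternary_dot(x: Sequence[int], w: Sequence[int]) -> int:
    """Dot product with weights restricted to {-1, 0, +1}, using only adds and subtracts.

    Hypothetical illustration of a non-MAC operation: no multiplications are performed.
    """
    if len(x) != len(w):
        raise ValueError("x and w must have the same length")
    acc = 0
    for xi, wi in zip(x, w):
        if wi == 1:
            acc += xi  # +1 weight: add the activation
        elif wi == -1:
            acc -= xi  # -1 weight: subtract the activation
        elif wi != 0:
            raise ValueError("weights must be -1, 0, or +1")
        # 0 weight: skipped entirely, which is also where sparsity saves energy


    return acc


# Usage: integer activations with ternary weights
print(ternary_dot([12, -3, 7, 4, 5], [1, 0, -1, 1, -1]))  # 12 - 7 + 4 - 5 = 4
```

On hardware such as an FPGA, the same idea replaces multiplier blocks with adders and sign selection, which is the general motivation for multiplication-free networks.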

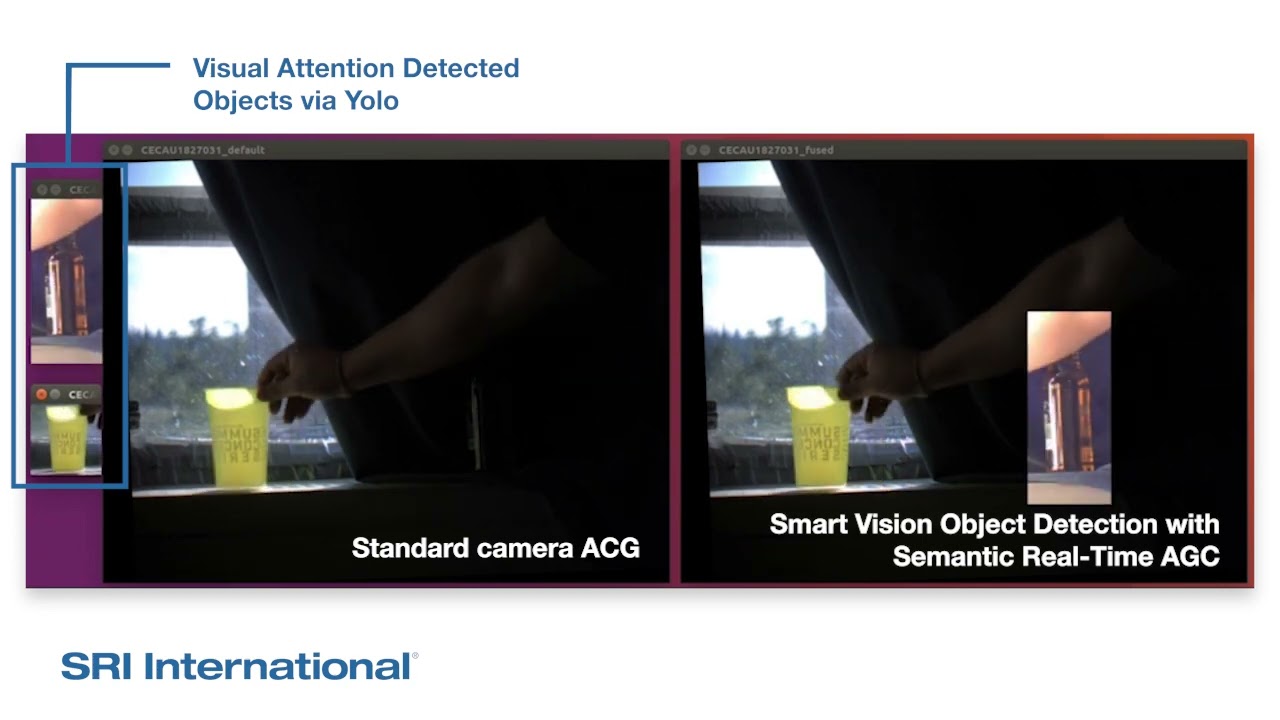

SmartVision

Multi-sensor fusion and visualization

Recent work

BASF selects SRI International to help refine and improve computer vision applications

BASF, the world’s largest chemical producer, identified computer vision as a critical technology for addressing a significant number of its global and societal challenges.

Recent publications

Low-Power In-Pixel Computing with Current-Modulated Switched Capacitors

We present a scalable in-pixel processing architecture that can reduce the data throughput by 10X and consume less than 30 mW per megapixel at the imager frontend.
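
To make the quoted figures concrete, the sketch below turns the abstract's two numbers (a 10X throughput reduction and less than 30 mW per megapixel) into a rough frontend budget. The imager parameters (12 megapixels, 30 frames per second, 10-bit pixels) and the function name `in_pixel_budget` are hypothetical assumptions, not values from the paper.

```python
def in_pixel_budget(megapixels: float, fps: float, bits_per_pixel: int,
                    mw_per_mp: float = 30.0, reduction: float = 10.0):
    """Rough frontend budget from the abstract's figures (hypothetical helper).

    Returns (power upper bound in mW, raw Mbit/s, reduced Mbit/s).
    """
    power_mw = megapixels * mw_per_mp                    # upper bound: < 30 mW per megapixel
    raw_mbit_s = megapixels * fps * bits_per_pixel       # 1e6 pixels/MP and 1e6 bits/Mbit cancel
    return power_mw, raw_mbit_s, raw_mbit_s / reduction  # 10X throughput reduction


# Usage with an assumed 12 MP, 30 fps, 10-bit imager
power, raw, reduced = in_pixel_budget(megapixels=12, fps=30, bits_per_pixel=10)
print(f"<= {power:.0f} mW, {raw:.0f} -> {reduced:.0f} Mbit/s")  # <= 360 mW, 3600 -> 360 Mbit/s
```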

Sensor Trajectory Estimation by Triangulating Lidar Returns

The paper describes how to recover the sensor trajectory for an aerial lidar collect using the data for multiple-return lidar pulses.
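
A plausible geometric core of "triangulating" returns: the multiple returns of one pulse lie on a single ray from the sensor, so rays from several pulses emitted at nearly the same time should meet near the sensor position. The sketch below finds the least-squares point closest to a set of 3-D lines. It assumes the sensor barely moves over the pulses used in one solve (a trajectory would come from repeating this over short time windows); the function names are hypothetical and this is not presented as the paper's exact method.

```python
import numpy as np


def nearest_point_to_lines(points, directions):
    """Least-squares point minimizing the summed squared distance to 3-D lines.

    Line i passes through points[i] with direction directions[i].
    """
    points = np.asarray(points, dtype=float)
    d = np.asarray(directions, dtype=float)
    d = d / np.linalg.norm(d, axis=1, keepdims=True)  # unit directions
    A = np.zeros((3, 3))
    b = np.zeros(3)
    for p, u in zip(points, d):
        P = np.eye(3) - np.outer(u, u)  # projects onto the plane orthogonal to the line
        A += P
        b += P @ p
    # A is singular if all lines are parallel; at least two non-parallel rays are needed.
    return np.linalg.solve(A, b)


def sensor_position_from_pulses(first_returns, last_returns):
    """Hypothetical helper: each pulse's first and last returns define its ray."""
    first = np.asarray(first_returns, dtype=float)
    last = np.asarray(last_returns, dtype=float)
    return nearest_point_to_lines(first, last - first)
```

With noisy real returns, the same normal equations give the point that best agrees with all rays in the least-squares sense.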

Learning with Local Gradients at the Edge

To enable learning on edge devices with fast convergence and low memory, we present a novel backpropagation-free optimization algorithm dubbed Target Projection Stochastic Gradient Descent (tpSGD).
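
The summary does not spell out tpSGD's update rules. As a hedged illustration of the general idea of backpropagation-free, layer-local training, the sketch below trains each layer of a tiny network only from a local loss. The fixed random matrix `B` that projects the one-hot target into the hidden layer is an assumption borrowed from random target-projection methods, and `train_local` and `predict` are hypothetical names; this is not the authors' tpSGD.

```python
import numpy as np


def train_local(X, y, n_hidden=16, n_classes=2, lr=0.05, epochs=200, seed=0):
    """Train a 2-layer net where each layer uses only a local loss (no backprop).

    X has shape (n_features, n_samples); y holds integer class labels.
    """
    rng = np.random.default_rng(seed)
    n_in, n = X.shape
    W1 = rng.normal(0, 0.1, (n_hidden, n_in))
    W2 = rng.normal(0, 0.1, (n_classes, n_hidden))
    B = rng.normal(0, 1.0, (n_hidden, n_classes))  # fixed random target projection
    T = np.eye(n_classes)[:, y]                    # one-hot targets, shape (classes, n)
    for _ in range(epochs):
        # Hidden layer: regress its pre-activation toward the projected target B @ T.
        E1 = W1 @ X - B @ T
        W1 -= lr * E1 @ X.T / n
        H = np.maximum(W1 @ X, 0.0)  # ReLU features; no gradient flows back into W1
        # Output layer: softmax cross-entropy using only its own input H.
        Z = W2 @ H
        Z -= Z.max(axis=0, keepdims=True)
        P = np.exp(Z)
        P /= P.sum(axis=0, keepdims=True)
        W2 -= lr * (P - T) @ H.T / n
    return W1, W2


def predict(W1, W2, X):
    return np.argmax(W2 @ np.maximum(W1 @ X, 0.0), axis=0)
```

Because no layer waits for an error signal from the layers above it, intermediate activations need not be stored for a backward pass, which is the kind of memory saving the abstract targets.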

Featured reports and publications

Hyper-Dimensional Analytics of Video Action at the Tactical Edge

We review HyDRATE, a low-SWaP reconfigurable neural network architecture developed under the DARPA AIE HyDDENN (Hyper-Dimensional Data Enabled Neural Network) program.
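
HyDRATE's encoders are not described in this summary. For readers new to hyper-dimensional computing, the sketch below shows the standard bipolar primitives: random hypervectors, binding by elementwise product, bundling by elementwise majority, and similarity by normalized dot product. The dimension `D` and the role/filler example (`ACTOR`, `walk`, and so on) are illustrative assumptions.

```python
import numpy as np

D = 10_000                       # hypervector dimension (assumed)
rng = np.random.default_rng(0)


def random_hv():
    """Random bipolar hypervector with entries in {-1, +1}."""
    return rng.choice([-1, 1], size=D)


def bind(a, b):
    """Elementwise product: result is dissimilar to both inputs and self-inverse."""
    return a * b


def bundle(*hvs):
    """Elementwise majority (sign of the sum): result stays similar to each input.

    Ties (possible with an even count) are broken toward +1.
    """
    s = np.sum(hvs, axis=0)
    return np.where(s >= 0, 1, -1)


def similarity(a, b):
    """Normalized dot product in [-1, 1]; about 0 for unrelated hypervectors."""
    return float(a @ b) / D


# Usage: encode a (hypothetical) action record, then query its ACTION field.
ACTOR, ACTION, PLACE = random_hv(), random_hv(), random_hv()
person, walk, street = random_hv(), random_hv(), random_hv()
record = bundle(bind(ACTOR, person), bind(ACTION, walk), bind(PLACE, street))
query = bind(record, ACTION)     # unbinding, since ACTION * ACTION is all ones
```

Here `query` is clearly similar to `walk` and close to orthogonal to `person`; these operations need only sign flips, additions, and comparisons, which suits low-SWaP hardware.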

Bit Efficient Quantization for Deep Neural Networks

In this paper, we present a comparison of model-parameter driven quantization approaches that can achieve as low as 3-bit precision without affecting accuracy.
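
As a baseline for what "3-bit precision" means, the sketch below applies uniform symmetric quantization with a per-tensor max-abs scale. Max-abs scaling is one simple choice assumed here for illustration; the paper compares model-parameter driven approaches that may choose the scale differently. Function names are hypothetical.

```python
import numpy as np


def quantize_symmetric(w, bits):
    """Uniform symmetric quantization of w to signed integer levels.

    With bits=3 this uses the 7 levels -3..3 (the extra -4 code is left unused).
    """
    w = np.asarray(w, dtype=float)
    qmax = 2 ** (bits - 1) - 1
    scale = np.max(np.abs(w)) / qmax if np.any(w) else 1.0  # avoid dividing by zero
    q = np.clip(np.round(w / scale), -qmax, qmax).astype(np.int32)
    return q, scale


def dequantize(q, scale):
    """Map integer codes back to real values."""
    return q.astype(np.float64) * scale
```

With this scheme the reconstruction error of every weight is at most half of `scale`; a few large outliers inflate `scale`, which is one reason smarter, parameter-driven choices of the clipping range matter at very low bit widths.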

Generalized Ternary Connect: End-to-End Learning and Compression of Multiplication-Free Deep Neural Networks

The use of deep neural networks in edge computing devices hinges on the balance between accuracy and complexity of computations. Ternary Connect (TC) (Lin et al., 2015) addresses this issue by restricting the parameters to three levels.
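
To show what restricting parameters to three levels looks like in practice, the sketch below maps real weights to `alpha * {-1, 0, +1}` using the magnitude-threshold heuristic popularized by Ternary Weight Networks (threshold `0.7 * mean(|w|)`). This fixed heuristic is a swapped-in, illustrative technique: Generalized Ternary Connect itself learns its quantization end to end, and `ternarize` is a hypothetical name.

```python
import numpy as np


def ternarize(w, delta_factor=0.7):
    """Map real weights to alpha * {-1, 0, +1} using a magnitude threshold.

    Threshold heuristic from Ternary Weight Networks (assumed here for illustration).
    """
    w = np.asarray(w, dtype=float)
    delta = delta_factor * np.mean(np.abs(w))        # weights within +/-delta become 0
    t = np.where(w > delta, 1, np.where(w < -delta, -1, 0))
    nonzero = t != 0
    # Per-tensor scale: mean magnitude of the weights that survive thresholding.
    alpha = float(np.mean(np.abs(w[nonzero]))) if nonzero.any() else 0.0
    return t.astype(np.int8), alpha


# Usage
t, alpha = ternarize([0.8, -0.05, 0.02, -0.6, 0.3])
print(t.tolist())  # [1, 0, 0, -1, 1]
```

Since a single `alpha` is shared by the whole tensor, a layer's output can be computed with additions and subtractions of the ternary codes followed by one multiplication by `alpha`.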

BitNet: Bit-Regularized Deep Neural Networks

We present a novel optimization strategy for training neural networks which we call “BitNet”. Our key idea is to limit the expressive power of the network by dynamically controlling the range and set of values that the parameters can take.
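
One way to read "controlling the range and set of values that the parameters can take" is a per-layer range `[lo, hi]` plus a bit count `bits`, so that each parameter is limited to the `2**bits` evenly spaced values inside that range. The sketch below implements only that projection step, as an illustrative assumption; how BitNet adjusts the range and bit count during training is not shown, and `quantize_to_range` is a hypothetical name.

```python
import numpy as np


def quantize_to_range(w, lo, hi, bits):
    """Snap w onto the 2**bits evenly spaced levels spanning [lo, hi].

    Values outside the range are clipped to its endpoints.
    """
    if hi <= lo:
        raise ValueError("need hi > lo")
    steps = 2 ** bits - 1                 # number of intervals between lo and hi
    step = (hi - lo) / steps
    idx = np.clip(np.round((np.asarray(w, dtype=float) - lo) / step), 0, steps)
    return lo + idx * step
```

Shrinking `hi - lo` or `bits` lowers the network's expressive power, which is the lever the abstract describes; within the range, the rounding error is at most half of `step`.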

Low Precision Neural Networks using Subband Decomposition

In this paper, we present a unique approach that uses lower-precision weights for a more efficient and faster training phase.
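
The summary does not say which decomposition the paper uses. As a hedged illustration of subband decomposition in general, the sketch below applies a one-level 2-D Haar transform that splits an image into four half-resolution subbands and reconstructs it exactly. For natural images most energy tends to land in the low-pass band, so the detail bands often have a small dynamic range. Function names are hypothetical.

```python
import numpy as np


def haar2d(img):
    """One-level orthonormal 2-D Haar analysis; returns (LL, LH, HL, HH), each half size."""
    x = np.asarray(img, dtype=float)
    if x.shape[0] % 2 or x.shape[1] % 2:
        raise ValueError("image dimensions must be even")
    a, b = x[0::2, 0::2], x[0::2, 1::2]  # top-left, top-right of each 2x2 block
    c, d = x[1::2, 0::2], x[1::2, 1::2]  # bottom-left, bottom-right
    ll = (a + b + c + d) / 2             # low-pass average
    lh = (a - b + c - d) / 2             # difference across columns
    hl = (a + b - c - d) / 2             # difference across rows
    hh = (a - b - c + d) / 2             # diagonal difference
    return ll, lh, hl, hh


def ihaar2d(ll, lh, hl, hh):
    """Exact inverse of haar2d."""
    out = np.empty((ll.shape[0] * 2, ll.shape[1] * 2))
    out[0::2, 0::2] = (ll + lh + hl + hh) / 2
    out[0::2, 1::2] = (ll - lh + hl - hh) / 2
    out[1::2, 0::2] = (ll + lh - hl - hh) / 2
    out[1::2, 1::2] = (ll - lh - hl + hh) / 2
    return out
```

Because the transform is orthonormal, the total energy across the four subbands equals the image energy, so precision can be allocated per band without losing information in the transform itself.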